Methods/Statistics

Comparison of disease risk score methods to study treatment effect heterogeneity: a simulation study

Haedi Thelen*, Wei Yang, Sean Hennessy, Jordana Cohen, Wensheng Guo, Todd Miano

Background: Estimating treatment effects across levels of predicted baseline disease risk, i.e., the disease risk score (DRS), is a popular method for evaluating treatment effect heterogeneity in randomized controlled trials (RCTs). Although prior studies suggest that trial data can be used for DRS derivation, the optimal approach to using the same trial data both to fit DRS models and to estimate treatment effects is uncertain. This simulation study compares three approaches to fitting DRS models in RCT data.

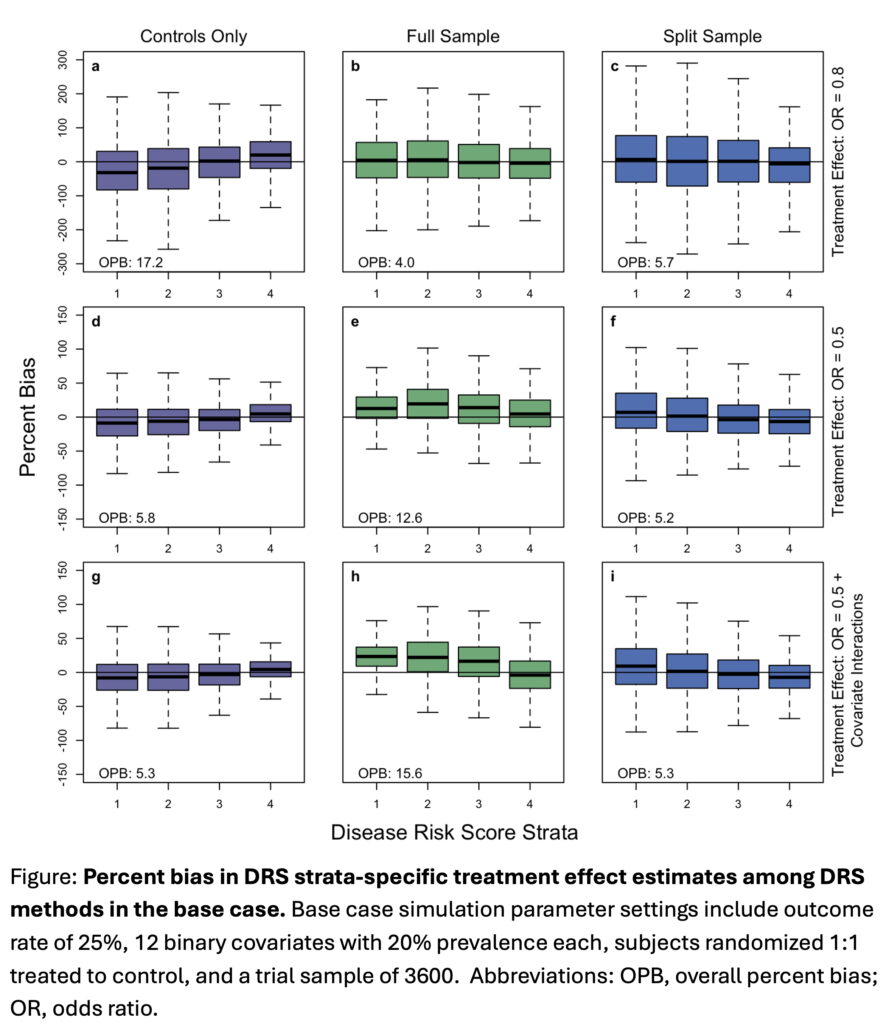

Methods: We simulated repeated RCTs of a binary treatment by drawing samples of 3,600 from a population of one million subjects with 12 binary covariates. We evaluated four ground-truth treatment effect scenarios: odds ratio (OR) = 1, OR = 0.8, OR = 0.5, and OR = 0.5 with treatment-covariate interactions on the OR scale. In each scenario, we fit a DRS model on 1) controls only (CO); 2) the full sample of treated and control subjects (FS); and 3) a 50% random sample of controls, who were then removed from the sample used for treatment effect estimation (split-sample [SS] method). We estimated treatment effects within strata of predicted DRS on the risk difference scale. We calculated within-stratum percent bias and overall percent bias (OPB), defined as the mean absolute percent bias across strata. We repeated the simulations while varying outcome incidence, trial sample size, and randomization ratio (treated to control, 1:1 and 2:1).
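The sample-allocation step and the OPB summary can be sketched in code. The following is a minimal illustration under stated assumptions, not the authors' implementation: the function names `split_for_drs` and `overall_percent_bias` are hypothetical, percent bias is assumed to be 100 × (estimate − truth) / truth, and the abstract does not say how percent bias is handled when the true risk difference is zero, so this sketch simply rejects that case.

```python
import random
from statistics import mean


def split_for_drs(treated, method, rng):
    """Return (fit_idx, est_idx) for one simulated trial.

    fit_idx: rows used to fit the DRS model.
    est_idx: rows used to estimate stratum-specific treatment effects.
    `treated` is a sequence of booleans (True = treated arm).
    """
    all_idx = list(range(len(treated)))
    control_idx = [i for i in all_idx if not treated[i]]
    if method == "CO":  # controls only: fit on controls, estimate on everyone
        return control_idx, all_idx
    if method == "FS":  # full sample: fit and estimate on everyone
        return all_idx, all_idx
    if method == "SS":  # split sample: fit on half of the controls, then drop them
        fit_idx = sorted(rng.sample(control_idx, len(control_idx) // 2))
        held_out = set(fit_idx)
        est_idx = [i for i in all_idx if i not in held_out]
        return fit_idx, est_idx
    raise ValueError(f"unknown method: {method!r}")


def overall_percent_bias(estimates, truths):
    """Return (per-stratum percent bias, mean absolute percent bias).

    Assumes percent bias = 100 * (estimate - truth) / truth, which is
    undefined when the true effect in a stratum is zero.
    """
    if any(t == 0 for t in truths):
        raise ValueError("percent bias is undefined when the true effect is zero")
    pct = [100.0 * (e - t) / t for e, t in zip(estimates, truths)]
    return pct, mean([abs(p) for p in pct])


# Illustrative use with a 1:1 allocation of 3,600 subjects (alternating arms).
rng = random.Random(0)
treated = [i % 2 == 0 for i in range(3600)]
fit_idx, est_idx = split_for_drs(treated, "SS", rng)
```

With the SS method the rows used to fit the DRS model never contribute to effect estimation, at the cost of a smaller control arm in the analysis sample.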

Results: In the base case with a null treatment effect, the CO method was substantially biased, whereas the FS and SS methods exhibited less bias. Bias with the FS method increased as the treatment effect increased and when treatment-covariate interactions were present (Figure, panels b, e, h), whereas the SS method remained minimally biased (panels c, f, i). Bias with the FS method was greatest with uneven randomization (OPB 21.4%).

Conclusion: These findings encourage re-evaluation of existing guidance in favor of split-sample methods, which were less biased across the scenarios studied.